02-Install

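Quorum [ˈkwɔːrəm]: quorum disk; quorum (the minimum number of members required); consensus

peer [pɪə(r)]: n. a person of the same age, rank, or status; (UK) a member of the nobility. v. to look closely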

1. Standalone

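- Install JDK 1.8+ and configure JAVA_HOME (CentOS 6.10 64-bit)

- Configure the hostname and the hostname-to-IP mapping

- Stop the firewall and disable its start on boot

- Install and start Zookeeper

- Install, start, and stop Kafka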

1. JDK

# Installs under /usr/java/ by default

rpm -ivh jdk-8u191-linux-x64.rpm

# List every package installed through rpm

rpm -qa

# Check whether the JDK is installed

rpm -qa | grep jdk

# Uninstall the JDK

rpm -e `rpm -qa | grep jdk`

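# With an rpm install on CentOS, java and javac are found even without JAVA_HOME or CLASSPATH

java -version

# The rpm install makes java and javac available, but not jps,

# so the environment variables still have to be configured

jps

# Environment variables: see mca/01_linux/01_soft/01_jdk.md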

# Change the hostname (CentOS 6)

cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=CentOS # edit this value, then reboot

# CentOS 7 and later

# Show the hostname

hostnamectl

# Set the hostname

hostnamectl set-hostname ooxx

# Hostname-to-IP mapping

vim /etc/hosts

192.168.52.129 ooxx

ping ooxx

# Firewall

service iptables stop|status|start

# Disable start on boot

chkconfig iptables off

# List the start-on-boot setting of every service

chkconfig --list

chkconfig --list | grep iptables

# Reboot the server

reboot
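
Before moving on, it can help to confirm that the hostname mapping was actually written. The sketch below is one way to do that. `hosts_lookup` is a hypothetical helper name, not part of any tool used in these notes, and it only reads a hosts-format file; it does not test real name resolution the way `ping ooxx` does.

```shell
#!/bin/sh
# hosts_lookup NAME [FILE]: print the IP mapped to NAME in a hosts-format file.
# Returns non-zero when NAME has no entry. FILE defaults to /etc/hosts.
hosts_lookup() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v name="$name" '
        { sub(/#.*/, "") }                  # drop trailing comments
        NF >= 2 {
            # field 1 is the IP, every later field is a hostname or alias
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; found = 1; exit }
        }
        END { exit (found ? 0 : 1) }
    ' "$file"
}
```

For example, `hosts_lookup ooxx` should print `192.168.52.129` once the line above has been added to /etc/hosts.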

2. Zookeeper

# Add -C to extract into a specific directory

tar -zxf zookeeper-3.4.6.tar.gz

ln -s /root/soft/zookeeper-3.4.6 /usr/local/zookeeper

cd /usr/local/zookeeper/conf

cp zoo_sample.cfg zoo.cfg

# Edit the config (optional)

vim zoo.cfg

# dataDir=/tmp/zookeeper

dataDir=/root/data/zookeeper

cd /usr/local/zookeeper/bin

# Start Zookeeper

sh /usr/local/zookeeper/bin/zkServer.sh start zoo.cfg

# Stop Zookeeper

sh /usr/local/zookeeper/bin/zkServer.sh stop zoo.cfg

# Check whether Zookeeper is running

[root@hecs-168322 bin]# sh /usr/local/zookeeper/bin/zkServer.sh status zoo.cfg

JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: standalone # single node

[root@hecs-168322 bin]# jps

19089 QuorumPeerMain # Zookeeper process

19142 Jps

26426 jar

735 WrapperSimpleApp
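
When the status check is scripted, the `Mode:` line is the part worth extracting. A minimal sketch, assuming the output has the shape shown above; `zk_mode` is a hypothetical helper name, and it only parses text piped into it.

```shell
#!/bin/sh
# zk_mode: read `zkServer.sh status` output on stdin and print the Mode value
# (for example standalone, leader, or follower).
# Returns non-zero when the input has no Mode line.
zk_mode() {
    mode=$(sed -n 's/^Mode:[[:space:]]*\([a-z]*\).*/\1/p')
    [ -n "$mode" ] && printf '%s\n' "$mode"
}
```

Usage: `sh /usr/local/zookeeper/bin/zkServer.sh status zoo.cfg 2>&1 | zk_mode`.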

3. Kafka

- Scala 2.12 - kafka_2.12-3.2.3.tgz (asc, sha512)

- 2.12 is the Scala version that this Kafka build depends on

- 3.2.3 is the Kafka version
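
The naming rule above can be checked mechanically. The sketch below splits an archive name into its two versions. `kafka_versions` is a hypothetical helper name, and the function assumes the `kafka_<scala>-<kafka>.tgz` pattern described in the list.

```shell
#!/bin/sh
# kafka_versions FILE: split a Kafka archive name of the form
# kafka_<scala>-<kafka>.tgz into its Scala and Kafka version numbers.
kafka_versions() {
    base=$(basename "$1" .tgz)      # e.g. kafka_2.12-3.2.3
    case $base in
        kafka_*-*) ;;
        *) echo "unexpected archive name: $1" >&2; return 1 ;;
    esac
    rest=${base#kafka_}             # e.g. 2.12-3.2.3
    # text before the first dash is the Scala version, the remainder is Kafka's
    printf 'scala=%s kafka=%s\n' "${rest%%-*}" "${rest#*-}"
}
```

`kafka_versions kafka_2.12-3.2.3.tgz` prints `scala=2.12 kafka=3.2.3`.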

tar -zxf kafka_2.11-2.2.0.tgz

ln -s /root/soft/kafka_2.11-2.2.0 /usr/local/kafka

# Edit the config (back it up first)

cp /usr/local/kafka/config/server.properties /usr/local/kafka/config/server.properties.bak

vim /usr/local/kafka/config/server.properties

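# Start Kafka in the foreground

sh /usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties

# Or start Kafka in the background

sh /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

# Stop Kafka (asynchronous: the script returns before the broker has exited)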

sh /usr/local/kafka/bin/kafka-server-stop.sh

[root@hecs-168322 bin]# jps

19680 Kafka # Kafka process

19089 QuorumPeerMain

26426 jar

735 WrapperSimpleApp

19743 Jps

# Shut down the machine

shutdown -h now

1. server.properties

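# Private (internal) ip:port

listeners=PLAINTEXT://192.168.0.63:9092

# Public ip:port (not needed if the host has no public address)

advertised.listeners=PLAINTEXT://ip:9092

# Zookeeper address to connect to, as public ip:port

zookeeper.connect=ip:port

# Log retention in hours (7 * 24 = 168). Leave unchanged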

log.retention.hours=168
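
After editing server.properties it is easy to lose track of which values are in effect. The sketch below reads a single key back out of the file. `prop_get` is a hypothetical helper name, and it handles only simple `key=value` lines, not the full Java properties syntax (no line continuations or `:` separators).

```shell
#!/bin/sh
# prop_get KEY FILE: print the value of KEY from a key=value properties file.
# Lines starting with # are skipped; if KEY appears more than once, the last one wins.
# Returns non-zero when KEY is not set.
prop_get() {
    awk -v key="$1" '
        /^[ \t]*#/ { next }
        {
            eq = index($0, "=")             # split on the first "=" only
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)  # trim whitespace around the key
            if (k == key) { value = substr($0, eq + 1); found = 1 }
        }
        END { if (found) print value; exit (found ? 0 : 1) }
    ' "$2"
}
```

Usage: `prop_get listeners /usr/local/kafka/config/server.properties`.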

4. Testing

cd /usr/local/kafka/bin

./kafka-topics.sh --help

# Create a topic (replication-factor cannot exceed the number of brokers)

./kafka-topics.sh --bootstrap-server ip:9092 --create --topic t1 --partitions 3 --replication-factor 1

# consumer

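# 1. Consumers in the same group take turns: each message is delivered to only one of them

# 2. Consumers in different groups each receive every message (broadcast)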

./kafka-console-consumer.sh --bootstrap-server ip:9092 --topic t1 --group g1

# producer

./kafka-console-producer.sh --broker-list ip:9092 --topic t1
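
The group behaviour noted above follows from how partitions are handed out: within one group, each partition is read by exactly one consumer. The sketch below is a simplified illustration of that idea. It is not Kafka's actual assignor, and `assign_partitions` is a hypothetical name.

```shell
#!/bin/sh
# assign_partitions PARTITIONS CONSUMERS: print "partition -> consumer" pairs,
# spreading the partitions over the consumers of one group in round-robin order.
# Illustration only; Kafka's real assignment strategies are more involved.
assign_partitions() {
    partitions=$1
    consumers=$2
    if [ "$consumers" -lt 1 ]; then
        echo "need at least one consumer" >&2
        return 1
    fi
    p=0
    while [ "$p" -lt "$partitions" ]; do
        printf 'partition %d -> consumer %d\n' "$p" $((p % consumers))
        p=$((p + 1))
    done
}
```

With the 3 partitions created above and two consumers in group g1, `assign_partitions 3 2` gives consumer 0 two partitions and consumer 1 one. A second group would get its own independent assignment over all 3 partitions, which is why it sees every message.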

5. Attention

# The replication factor (replication-factor) cannot exceed the number of brokers

[root@hecs-168322 bin]# ./kafka-topics.sh --bootstrap-server 192.168.*.*:9092 --create --topic topic01 --partitions 3 --replication-factor 2

Error while executing topic command : org.apache.kafka.common.errors.InvalidReplicationFactorException: Replication factor: 2 larger than available brokers: 1.

[2022-09-15 10:10:15,578] ERROR java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.InvalidReplicationFactorException: Replication factor: 2 larger than available brokers: 1.

at org.apache.kafka.common.internals.KafkaFutureImpl.wrapAndThrow(KafkaFutureImpl.java:45)

at org.apache.kafka.common.internals.KafkaFutureImpl.access$000(KafkaFutureImpl.java:32)

at org.apache.kafka.common.internals.KafkaFutureImpl$SingleWaiter.await(KafkaFutureImpl.java:89)

at org.apache.kafka.common.internals.KafkaFutureImpl.get(KafkaFutureImpl.java:260)

at kafka.admin.TopicCommand$AdminClientTopicService.createTopic(TopicCommand.scala:175)

at kafka.admin.TopicCommand$TopicService$class.createTopic(TopicCommand.scala:134)

at kafka.admin.TopicCommand$AdminClientTopicService.createTopic(TopicCommand.scala:157)

at kafka.admin.TopicCommand$.main(TopicCommand.scala:60)

at kafka.admin.TopicCommand.main(TopicCommand.scala)

Caused by: org.apache.kafka.common.errors.InvalidReplicationFactorException: Replication factor: 2 larger than available brokers: 1.

(kafka.admin.TopicCommand$)
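
The error above can be caught before calling kafka-topics.sh. A minimal sketch, assuming the operator already knows how many brokers are available; `check_replication_factor` is a hypothetical helper and does not query the cluster.

```shell
#!/bin/sh
# check_replication_factor FACTOR BROKERS: succeed only when FACTOR is at least 1
# and no larger than BROKERS, the rule behind InvalidReplicationFactorException.
check_replication_factor() {
    factor=$1
    brokers=$2
    if [ "$factor" -ge 1 ] && [ "$factor" -le "$brokers" ]; then
        return 0
    fi
    echo "replication factor $factor is not valid with $brokers broker(s)" >&2
    return 1
}
```

Usage: `check_replication_factor 2 1 || echo "lower --replication-factor or add brokers"`.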

2. kafka-eagle

tar -zxf kafka-eagle-bin-1.4.0.tar.gz

tar -zxf kafka-eagle-web-1.4.0-bin.tar.gz

ln -s /Users/list/soft/kafka-eagle-bin-1.4.0/kafka-eagle-web-1.4.0 /usr/local/kafka-eagle

# Environment variables

vi /etc/profile

export KE_HOME=/usr/local/kafka-eagle

source /etc/profile

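# Edit the config (back it up first)

cp /usr/local/kafka-eagle/conf/system-config.properties /usr/local/kafka-eagle/conf/system-config.properties.bak

vim /usr/local/kafka-eagle/conf/system-config.properties

# Enable JMX on the Kafka brokers

# Make the script executable, then start kafka-eagle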

cd /usr/local/kafka-eagle/bin

chmod u+x ke.sh

sh /usr/local/kafka-eagle/bin/ke.sh start

1. system-config.properties

# 1. Keep only cluster1

kafka.eagle.zk.cluster.alias=cluster1

cluster1.zk.list=127.0.0.1:2181

#cluster2.zk.list=xdn10:2181,xdn11:2181,xdn12:2181

# 2. Comment out the zk offset storage

cluster1.kafka.eagle.offset.storage=kafka

#cluster2.kafka.eagle.offset.storage=zk

# 3. Metrics charts, false by default. Kafka must have its JMX port enabled

kafka.eagle.metrics.charts=true

kafka.eagle.metrics.retain=30

# 4. Comment out the cluster2 settings

#cluster2.kafka.eagle.sasl.enable=false

#cluster2.kafka.eagle.sasl.protocol=SASL_PLAINTEXT

#cluster2.kafka.eagle.sasl.mechanism=PLAIN

#cluster2.kafka.eagle.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="kafka-eagle";

#cluster2.kafka.eagle.sasl.client.id=

# 5. Comment out the sqlite settings

#kafka.eagle.driver=org.sqlite.JDBC

#kafka.eagle.url=jdbc:sqlite:/hadoop/kafka-eagle/db/ke.db

#kafka.eagle.username=root

#kafka.eagle.password=www.kafka-eagle.org

# 6. MySQL connection. The ke database is created automatically when kafka-eagle starts

kafka.eagle.driver=com.mysql.jdbc.Driver

kafka.eagle.url=jdbc:mysql://ip:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

kafka.eagle.username=root

kafka.eagle.password=******

# Token used as a second confirmation when deleting a topic

kafka.eagle.topic.token=keadmin

2. Enable JMX

# Must be enabled on every broker in the cluster

vim /usr/local/kafka/bin/kafka-server-start.sh

```

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

# Adjust the Kafka JVM heap

export KAFKA_HEAP_OPTS="-Xmx256M -Xms128M"

# Enable JMX

export JMX_PORT="7788"

fi

```

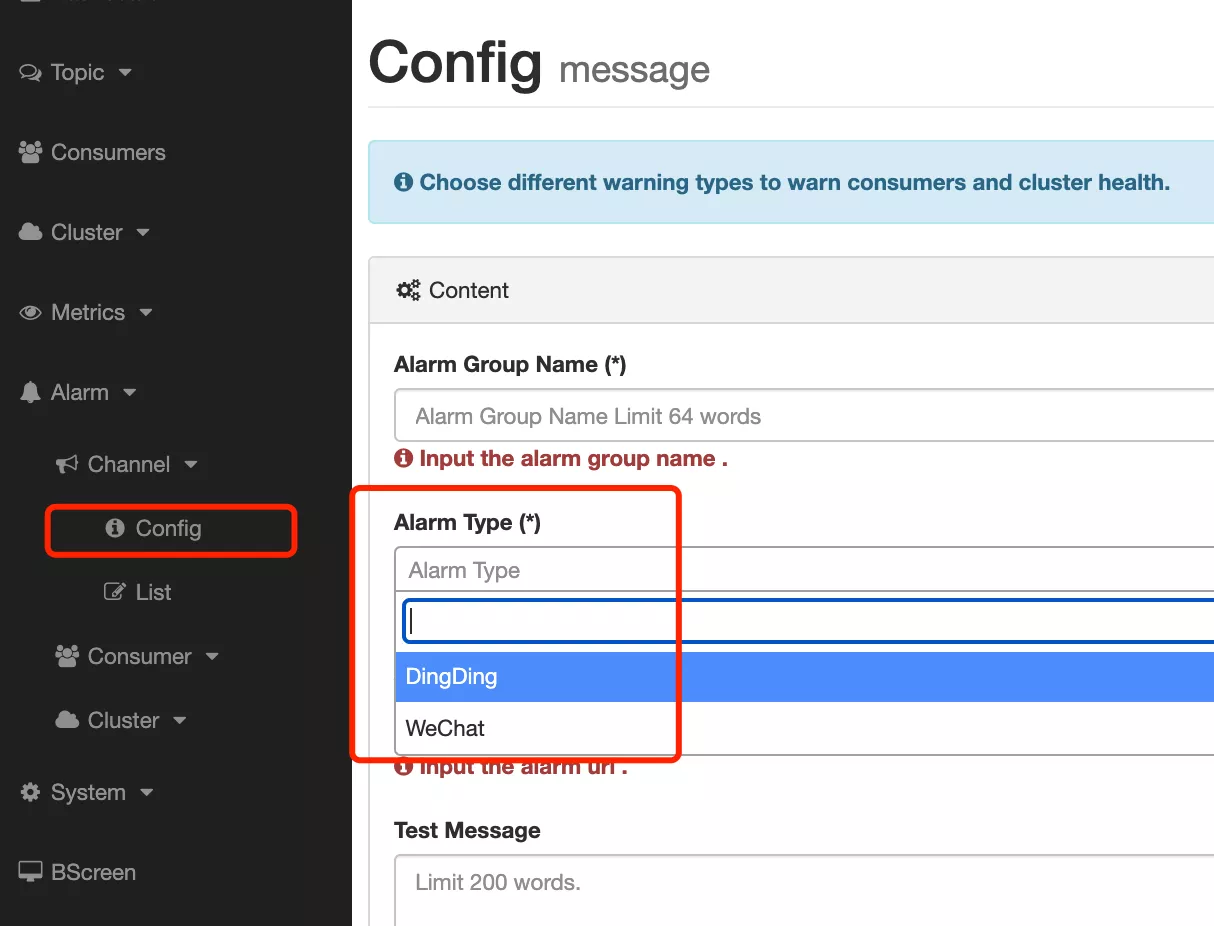
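
Because every broker needs this edit, a quick check of each start script can save a round of debugging missing charts. The sketch below only inspects the file text. `jmx_port_in` is a hypothetical helper name, and it does not verify that the port is actually listening.

```shell
#!/bin/sh
# jmx_port_in FILE: print the port from the first `export JMX_PORT=...` line in FILE.
# Returns non-zero when the file does not set JMX_PORT.
jmx_port_in() {
    # the quotes around the port number are optional
    port=$(sed -n 's/^[[:space:]]*export JMX_PORT="\{0,1\}\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1)
    [ -n "$port" ] && printf '%s\n' "$port"
}
```

Usage: `jmx_port_in /usr/local/kafka/bin/kafka-server-start.sh` should print `7788` after the change above.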

3. Usage

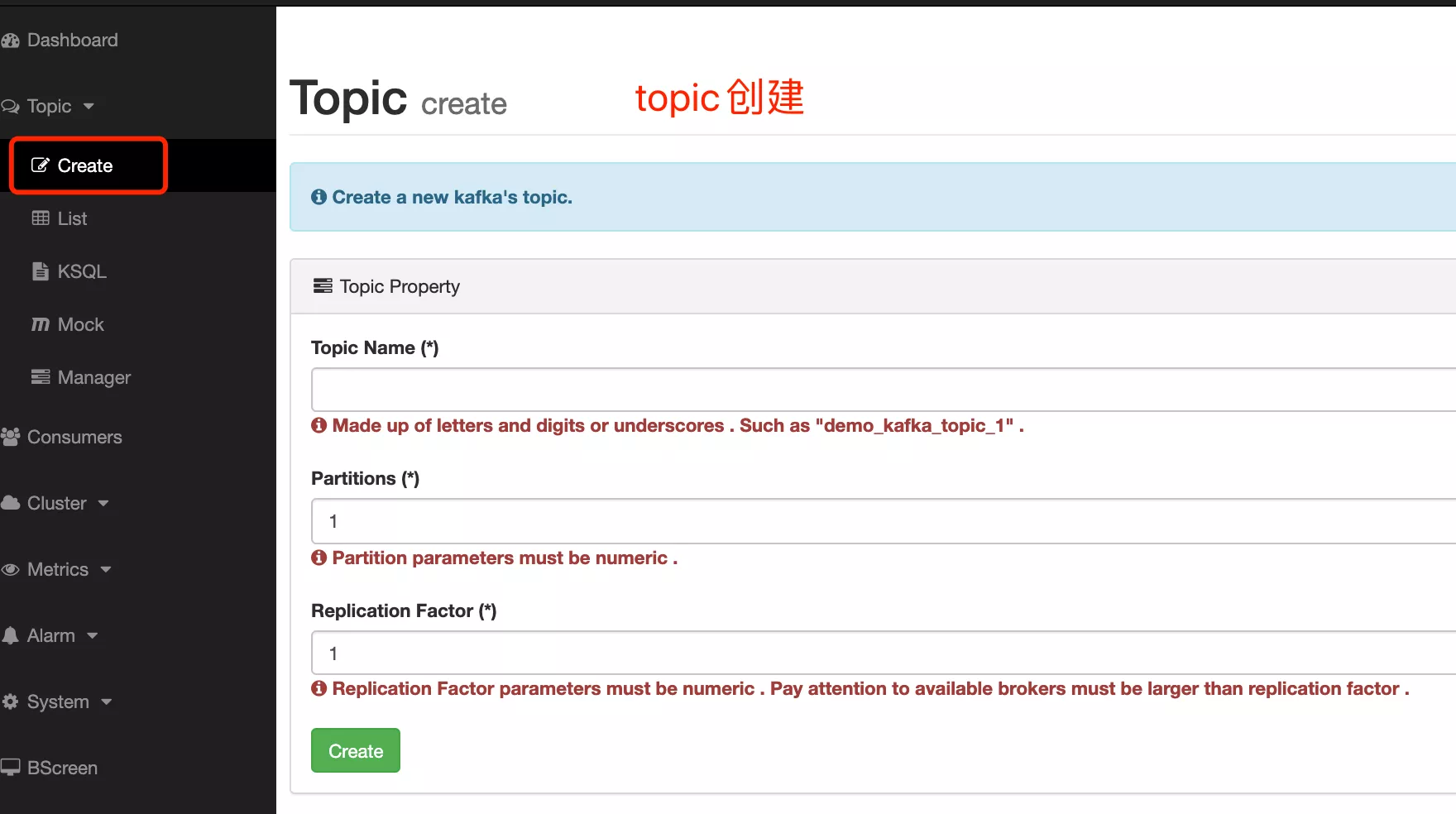

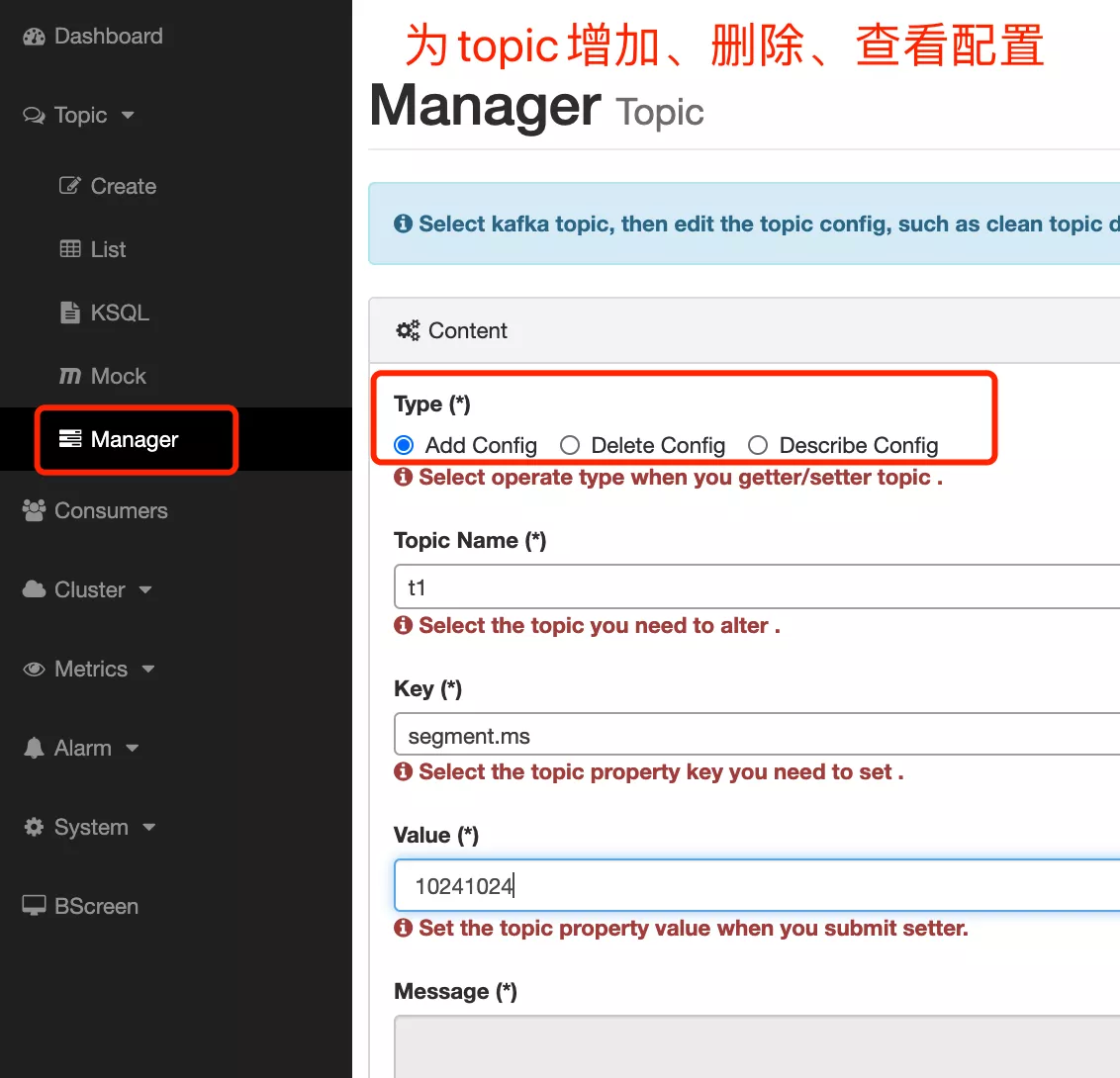

1. topic

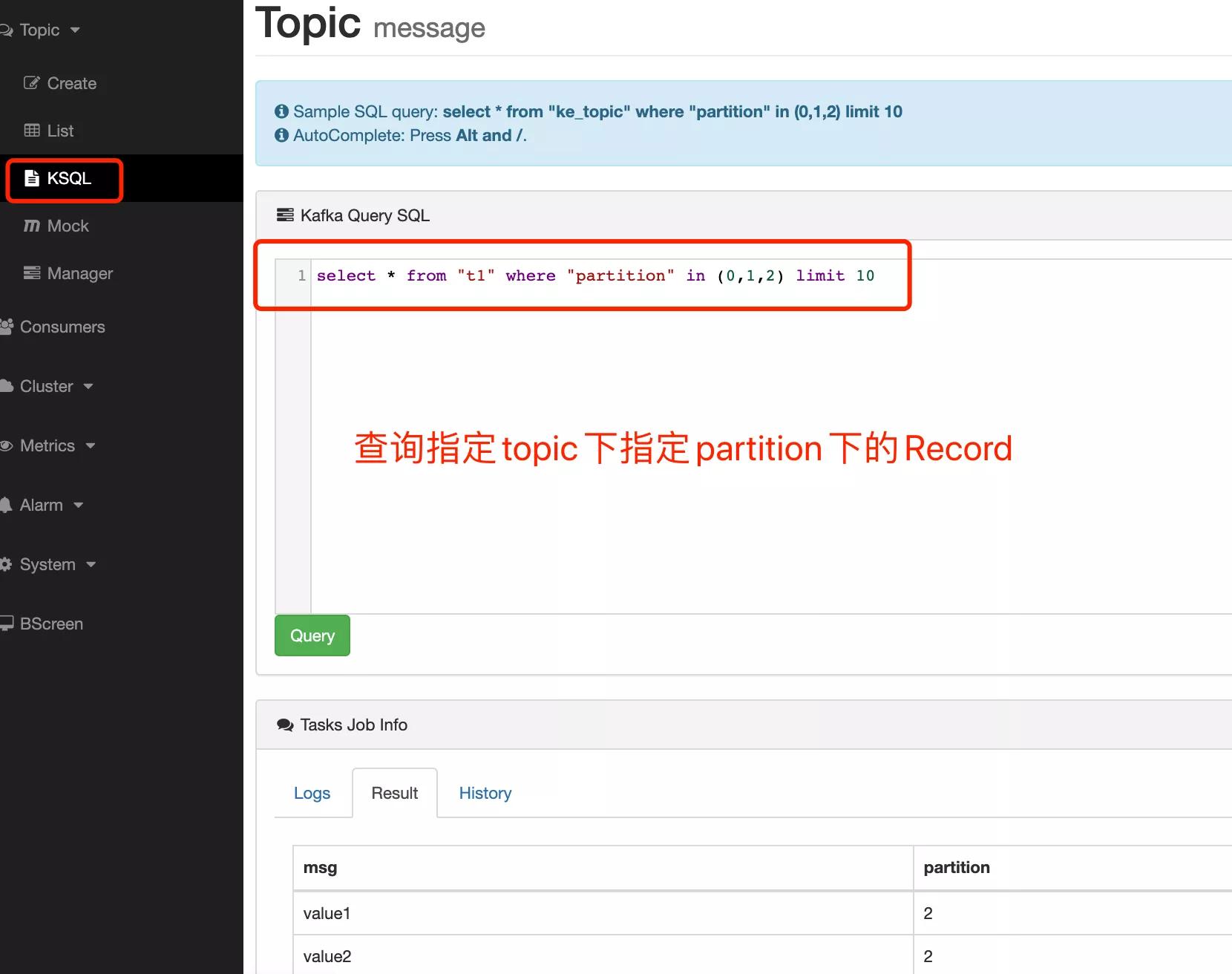

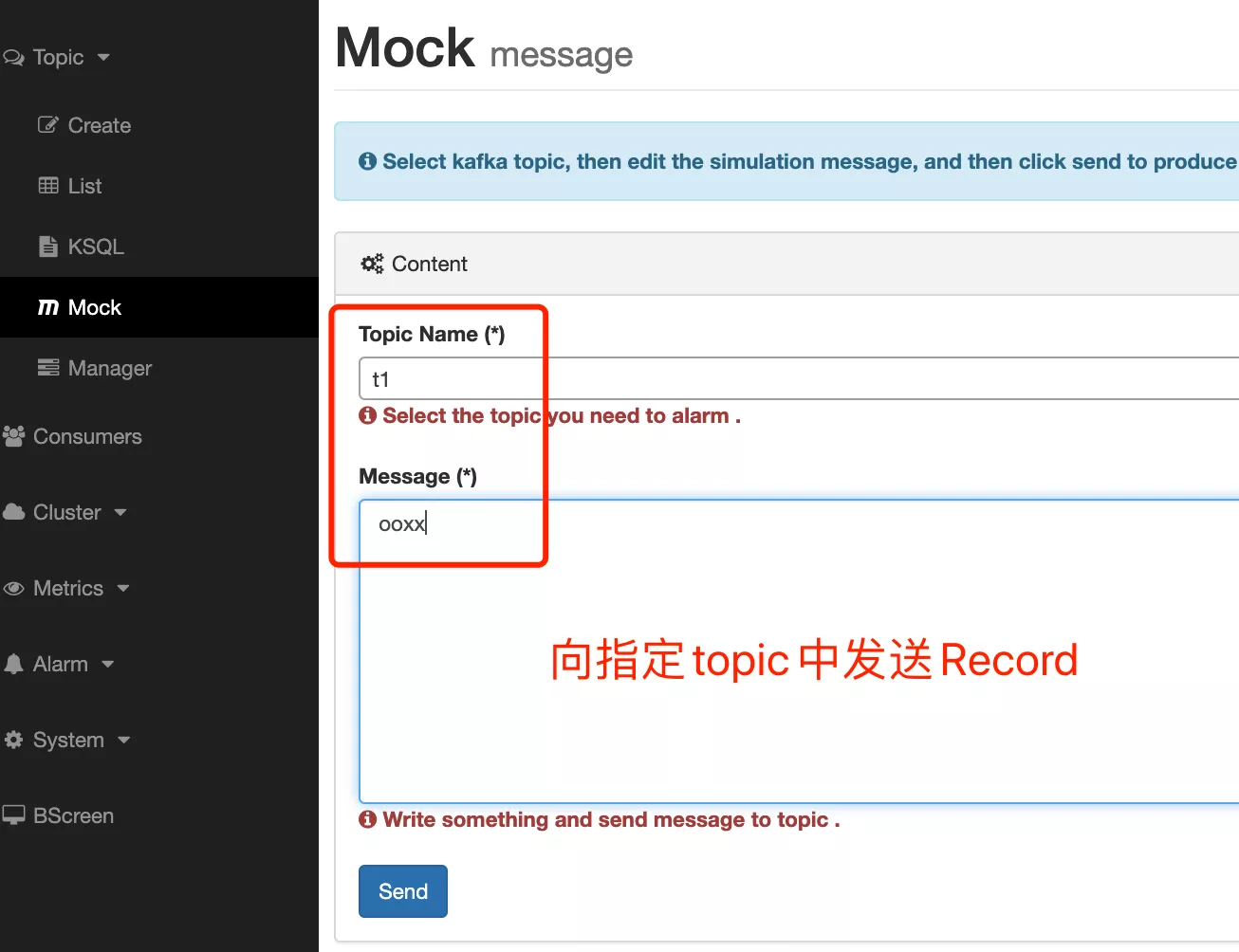

2. Record

3. Alarm

- Alerting requires integration with WeChat or DingTalk

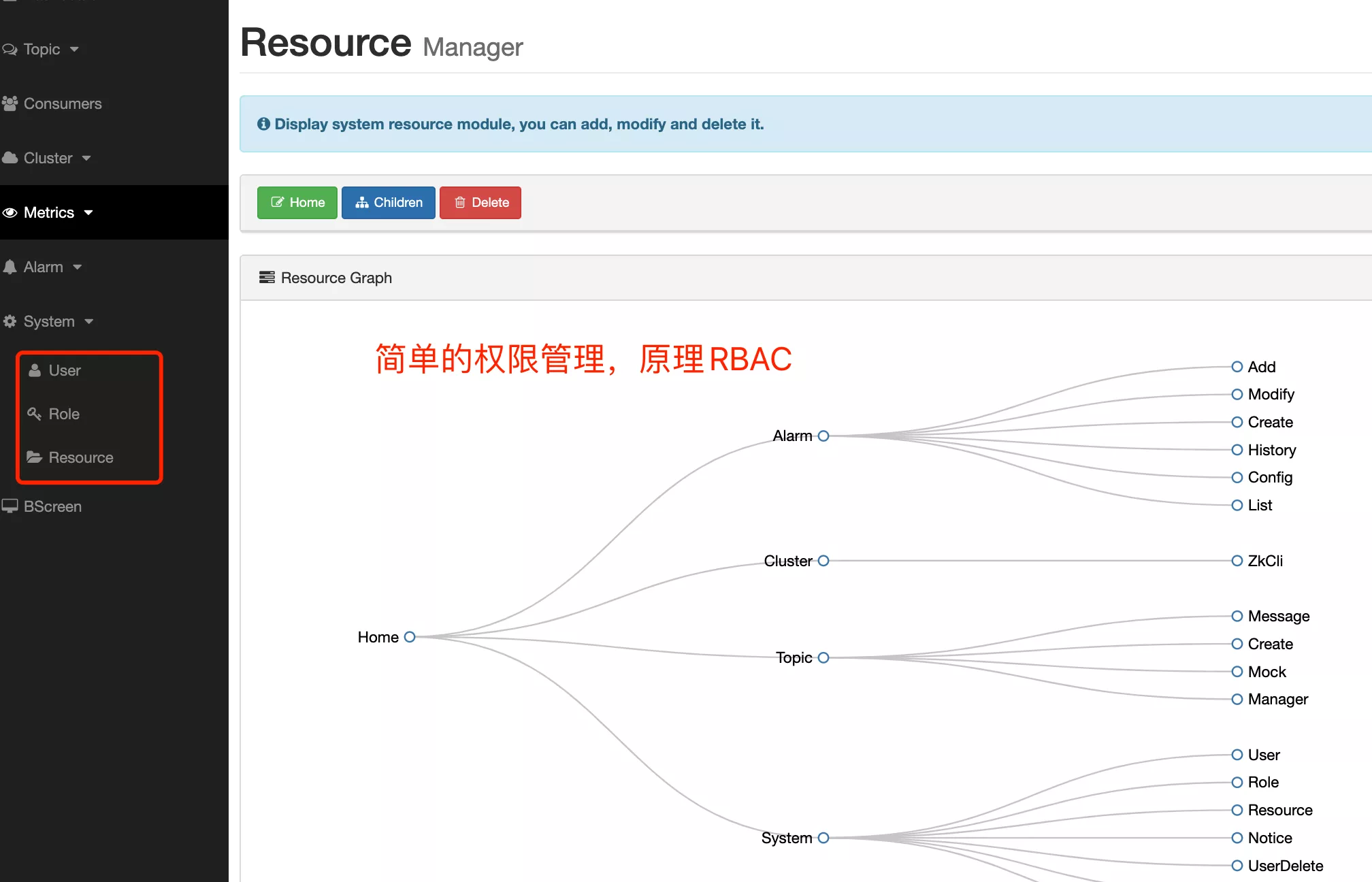

4. System

3. Cluster

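- Install JDK 1.8+ and configure JAVA_HOME (CentOS 6.10 64-bit)

- Configure the hostname and the hostname-to-IP mapping

- Stop the firewall and disable its start on boot

- Synchronize the clocks: ntpdate against cn.pool.ntp.org or ntp[1-7].aliyun.com

- Install and start Zookeeper

- Install, start, and stop Kafka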

# 1. install JDK

rpm -ivh jdk-8u191-linux-x64.rpm

# 2. hosts

vim /etc/hosts

```

192.168.52.130 CentOSA

192.168.52.131 CentOSB

192.168.52.132 CentOSC

```

# 3. Environment variables

# 4. firewall

service iptables stop|status|start

# Disable start on boot

chkconfig iptables off

# List the start-on-boot setting of every service

chkconfig --list

chkconfig --list | grep iptables

# 5. Clock synchronization

yum install ntp -y

# NTP servers to sync against: cn.pool.ntp.org or ntp[1-7].aliyun.com

ntpdate ntp1.aliyun.com

clock -w

1. Zookeeper

tar -zxf zookeeper-3.4.6.tar.gz

ln -s /root/soft/zookeeper-3.4.6 /usr/local/zookeeper

cd /usr/local/zookeeper/conf

cp zoo_sample.cfg zoo.cfg

# Edit the config

vim zoo.cfg

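# Write each server's id into a myid file in the data directory (dataDir in zoo.cfg)

# The id must match that host's server.N entry in zoo.cfg; run only the line for the current host

mkdir -p /root/data/zookeeper

echo 1 > /root/data/zookeeper/myid # on CentOSA

echo 2 > /root/data/zookeeper/myid # on CentOSB

echo 3 > /root/data/zookeeper/myid # on CentOSC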

cd /usr/local/zookeeper/bin

# Start Zookeeper

sh /usr/local/zookeeper/bin/zkServer.sh start zoo.cfg

# Stop Zookeeper

sh /usr/local/zookeeper/bin/zkServer.sh stop zoo.cfg

1. zoo.cfg

# Change the data directory (optional)

dataDir=/root/data/zookeeper

# 2888: data synchronization port

# 3888: leader election port

server.1=CentOSA:2888:3888

server.2=CentOSB:2888:3888

server.3=CentOSC:2888:3888
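
The `server.N` lines and the per-host myid values have to agree, so it can be convenient to derive both from one host list. A sketch under that assumption; `zk_server_lines` and `zk_myid_for` are hypothetical helper names, and ports 2888 and 3888 are simply the ones used above.

```shell
#!/bin/sh
# zk_server_lines HOST...: print one server.N line per host, numbering from 1.
zk_server_lines() {
    id=1
    for host in "$@"; do
        printf 'server.%d=%s:2888:3888\n' "$id" "$host"
        id=$((id + 1))
    done
}

# zk_myid_for HOST FILE: print the N of the server.N entry in FILE that names HOST.
# HOST is used inside a sed pattern, so this suits plain hostnames only.
zk_myid_for() {
    sed -n "s/^server\.\([0-9][0-9]*\)=$1:.*/\1/p" "$2"
}
```

Usage: `zk_server_lines CentOSA CentOSB CentOSC >> zoo.cfg`, then on each host `zk_myid_for "$(hostname)" zoo.cfg` gives the number to write into that host's myid file.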

2. Kafka

# Edit the config

vim /usr/local/kafka/config/server.properties

1. server.properties

# CentOSA

broker.id=0

listeners=PLAINTEXT://CentOSA:9092

# Directory for the persisted log data (changing it is optional)

log.dirs=/root/log/kafka-logs

zookeeper.connect=CentOSA:2181,CentOSB:2181,CentOSC:2181

# Log retention in hours (7 * 24 = 168). No need to change

log.retention.hours=168

# ---------------------------------------------------------

# CentOSB

broker.id=1

listeners=PLAINTEXT://CentOSB:9092

# ---------------------------------------------------------

# CentOSC

broker.id=2

listeners=PLAINTEXT://CentOSC:9092
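
Each broker needs its own `broker.id`, and a copied server.properties is an easy way to end up with two brokers sharing one. The sketch below flags that case. `duplicate_broker_ids` is a hypothetical helper name, and it assumes the three files have been gathered in one place for comparison.

```shell
#!/bin/sh
# duplicate_broker_ids FILE...: print every broker.id value that is used by
# more than one of the given server.properties files. No output means all ids are unique.
duplicate_broker_ids() {
    for f in "$@"; do
        sed -n 's/^broker\.id=\([0-9][0-9]*\).*/\1/p' "$f"
    done | sort -n | uniq -d
}
```

Usage: `duplicate_broker_ids a.properties b.properties c.properties`, where the three file names stand for copies of each host's config.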