03-Cache_DB

least [liːst] adv. to the smallest degree; adj. smallest (superlative of little); n. the smallest amount

eviction [ɪˈvɪkʃn] n. the act of expelling or driving out

1. Cache

1. expire

TTL: Time To Live

# 1. Set an expiration time (in seconds). Reading the key does not reset the TTL

127.0.0.1:6379> set k1 v1 ex 30

OK

127.0.0.1:6379> expire k1 50

(integer) 1

127.0.0.1:6379> expire k2 50

(integer) 0 # the key does not exist, or the timeout could not be set

127.0.0.1:6379> SETEX k1 20000 v1

OK

# 2.1. Expire at an absolute Unix timestamp

expireat k1 1680607272

# 2.2. Current server timestamp

127.0.0.1:6379> TIME

1) "1680607272"

2) "340005"

# 3.1. Remove the expiration (TTL becomes -1)

127.0.0.1:6379> PERSIST k1

(integer) 1

# 3.2. Overwriting the key with a plain SET also clears the TTL (TTL becomes -1)

127.0.0.1:6379> SET k1 v1

OK

# 4. Query the remaining TTL

# -1: no expiration (the default)

# -2: the key does not exist

ttl k1
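The return codes above can be sketched with a toy in-memory store. This is only an illustration of the semantics in these notes, not how Redis is implemented; the class name `TtlStore` and its injectable `clock` parameter are assumptions made for the example.

```python
import time


class TtlStore:
    """Toy key-value store that mimics the TTL return codes shown above."""

    def __init__(self, clock=time.time):
        self._clock = clock    # injectable so the example can control time
        self._data = {}        # key -> value
        self._expire_at = {}   # key -> absolute expiry time in seconds

    def set(self, key, value, ex=None):
        # A plain SET discards any previous TTL (step 3.2 above).
        self._data[key] = value
        self._expire_at.pop(key, None)
        if ex is not None:
            self._expire_at[key] = self._clock() + ex

    def expire(self, key, seconds):
        if not self._alive(key):
            return 0           # key does not exist
        self._expire_at[key] = self._clock() + seconds
        return 1

    def persist(self, key):
        if self._alive(key) and key in self._expire_at:
            del self._expire_at[key]
            return 1
        return 0

    def ttl(self, key):
        if not self._alive(key):
            return -2          # key does not exist
        if key not in self._expire_at:
            return -1          # key exists but never expires
        return int(self._expire_at[key] - self._clock())

    def _alive(self, key):
        # An expired key is removed the next time it is touched.
        deadline = self._expire_at.get(key)
        if deadline is not None and deadline <= self._clock():
            del self._data[key]
            del self._expire_at[key]
        return key in self._data
```

Passing a fake clock such as `clock=lambda: now[0]` makes the behaviour easy to step through without waiting for real time to pass.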

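2. Removing expired keys

- Passive (on-access) check: a key is checked and deleted when a client next accesses it (trades a little memory for performance)

- Active periodic check: a simple probabilistic algorithm

- Redis runs it 10 times per second: sample 20 random keys, delete the expired ones, and if more than 25% of the sample was expired, sample and delete again

- When memory runs out, the eviction policy is triggered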
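The active sampling loop described above can be sketched as follows. This is a simplified model: the function name `active_expire_cycle` and its parameters are made up for illustration, and the real server also limits how long each cycle may run.

```python
import random


def active_expire_cycle(expire_at, now, sample_size=20, threshold=0.25, rng=random):
    """Run one active-expiration cycle over {key: expiry_time}.

    Returns the number of keys deleted.
    """
    deleted = 0
    while expire_at:
        # Sample up to 20 random keys that have an expiry set.
        sample = rng.sample(list(expire_at), min(sample_size, len(expire_at)))
        expired = [key for key in sample if expire_at[key] <= now]
        for key in expired:
            del expire_at[key]
        deleted += len(expired)
        # Repeat only while more than 25% of the sample was expired.
        if len(expired) <= threshold * len(sample):
            break
    return deleted
```

With a mostly expired key space the loop keeps going until the expired share of a sample drops to 25% or less, which is why a burst of expirations is cleaned up quickly while a healthy key space costs only one sample per cycle.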

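3. Eviction policies

Reclamation policies

LRU: Least Recently Used (evicts what was used longest ago; focuses on recency)

LFU: Least Frequently Used (evicts what is used least often; focuses on access frequency)

# maxmemory <bytes> => the maximum amount of memory Redis may use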

# MAXMEMORY POLICY: how Redis will select what to remove when maxmemory

# is reached. You can select one from the following behaviors:

#

# volatile-lru -> Evict using approximated LRU, only keys with an expire set.

# allkeys-lru -> Evict any key using approximated LRU.

# volatile-lfu -> Evict using approximated LFU, only keys with an expire set.

# allkeys-lfu -> Evict any key using approximated LFU.

# volatile-random -> Remove a random key having an expire set.

# allkeys-random -> Remove a random key, any key.

# volatile-ttl -> Remove the key with the nearest expire time (minor TTL)

# noeviction -> Don't evict anything, just return an error on write operations.

#

# LRU means Least Recently Used

# LFU means Least Frequently Used

#

# Both LRU, LFU and volatile-ttl are implemented using approximated

# randomized algorithms.

#

# Note: with any of the above policies, when there are no suitable keys for

# eviction, Redis will return an error on write operations that require

# more memory. These are usually commands that create new keys, add data or

# modify existing keys. A few examples are: SET, INCR, HSET, LPUSH, SUNIONSTORE,

# SORT (due to the STORE argument), and EXEC (if the transaction includes any

# command that requires memory).

#

# The default is:

#

# maxmemory-policy noeviction

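- noeviction: not suitable for a cache, but required when Redis is used as a database, because a database must not discard old data

- If the cache relies heavily on TTLs, use volatile-lru; otherwise use allkeys-lru

allkeys: applies to all keys

volatile: applies only to keys that have an expiration set

- noeviction (default): returns an error when the memory limit has been reached and a client tries to write

- allkeys-lru: tries to evict the least recently used (LRU) keys

- allkeys-random: evicts random keys

- volatile-lru: tries to evict the least recently used (LRU) keys, but only among keys that have an expiration set

- volatile-random: evicts random keys, but only among keys that have an expiration set

- volatile-ttl: evicts keys with the shortest remaining time to live (TTL) first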
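The difference between allkeys-lru and volatile-lru, and the "approximated" part of the algorithm, can be sketched as below. This is an illustrative model only; `evict_one` and its parameters are hypothetical names, and the sample size of 5 is used here as an assumed default.

```python
import random


def evict_one(last_access, volatile_keys=None, samples=5, rng=random):
    """Evict one key using sampled (approximated) LRU.

    last_access:   {key: last access time}
    volatile_keys: if given, only these keys are candidates (volatile-lru);
                   if None, every key is a candidate (allkeys-lru).
    Returns the evicted key, or None when there is no suitable key.
    """
    candidates = [k for k in last_access
                  if volatile_keys is None or k in volatile_keys]
    if not candidates:
        return None  # nothing to evict: the caller has to reject the write
    # Look at a small random sample instead of keeping a full LRU ordering.
    sample = rng.sample(candidates, min(samples, len(candidates)))
    victim = min(sample, key=lambda k: last_access[k])
    del last_access[victim]
    return victim
```

Sampling avoids maintaining an exact LRU list for every key; a larger sample gets closer to true LRU at the cost of more work per eviction.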

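4. Expiration scenarios

- Business logic: today's transaction total is finalized once midnight passes and is only needed for one day. The business decides how long data lives in Redis

- Operations: developers define the rules for hot and cold data, and cold data is evicted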

2. DB

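- Cache: hot data that may be lost (volatile on power loss) => optimize for speed

- Database: the full data set, which must never be lost => durability

- redis + mysql => speed + durability

- Data consistency: do not aim for strong consistency (do not put both writes in one transaction); put a message queue in between and write to MySQL asynchronously

Every storage layer needs persistence (Redis writes data to disk without blocking)

- Snapshot (a point-in-time copy) => RDB

- Log => AOF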

1. pipeline

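| passes the output of the command before it as the input of the command after it

- A pipe triggers the creation of child processes

- Both sides of | run in their own child process, three processes in total (bash is the parent)

- Precedence (mnemonic): $$ keeps the PID of the original bash even inside a pipeline, while $BASHPID reports the process that actually runs the command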

$$ > pipeline > $BASHPID

# 1.1. Too much output for one screen

ls -l /etc

# 1.2. Paginate; press space for the next screen

ls -l /etc | more

# 2.1. Define a variable

num=0

echo $num

# 2.2. Arithmetic

((num++))

echo $num

# 2.3. num is still 1, because ((num++)) ran in a child process of the pipeline

((num++)) | echo ok

echo $num

# 3.1. Current process ID

echo $$ # $$ = $BASHPID

# 3.2. Prints the PID of the parent bash

echo $$ | more

# 3.3. Prints the PID of the child process

echo $BASHPID | more

echo $BASHPID


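2. Parent and child processes

- On Linux, a child process's data is isolated from its parent's

After a variable is exported as an environment variable, the child process can read the parent's value

# Current process ID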

[root@hecs-168322 ~]# echo $$

25125

# Define a variable in the current process

[root@hecs-168322 ~]# num=1

[root@hecs-168322 ~]# echo $num

1

# 1. Start a child process

[root@hecs-168322 ~]# /bin/bash

[root@hecs-168322 ~]# echo $$

25144

# 2. Process tree

[root@hecs-168322 ~]# pstree

systemd─┬─NetworkManager─┬─dhclient

│ └─2*[{NetworkManager}]

├─2*[agetty]

├─atd

├─auditd───{auditd}

├─chronyd

├─crond

├─dbus-daemon

├─hostguard───19*[{hostguard}]

├─hostwatch───2*[{hostwatch}]

├─master─┬─pickup

│ └─qmgr

├─polkitd───6*[{polkitd}]

├─rsyslogd───2*[{rsyslogd}]

├─sshd───sshd───bash───bash───pstree # child process

├─systemd-journal

├─systemd-logind

├─systemd-udevd

├─tuned───4*[{tuned}]

├─uniagent───8*[{uniagent}]

└─wrapper─┬─java───13*[{java}]

└─{wrapper}

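# 3. The child process cannot see the variable (nothing is printed)

[root@hecs-168322 ~]# echo $num

# 4. Back in the parent process, the variable is available again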

[root@hecs-168322 ~]# exit

exit

[root@hecs-168322 ~]# echo $num

1

# 5. After export, num is visible in the child process

[root@hecs-168322 ~]# export num

[root@hecs-168322 ~]# /bin/bash

[root@hecs-168322 ~]# echo $$

25161

[root@hecs-168322 ~]# echo $num

1

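After a variable is exported, later changes made by the parent or the child are not visible to the other

# 1.1. Edit the script

[root@hecs-168322 tmp]# vim test.sh

# 1.2. Make the script executable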

[root@hecs-168322 tmp]# chmod +x test.sh

[root@hecs-168322 tmp]# ll

total 4

-rwxr-xr-x 1 root root 168 Apr 4 23:07 test.sh

# 2. The parent process creates the variable and exports it

[root@hecs-168322 tmp]# echo $$

25253

[root@hecs-168322 tmp]# num=1

[root@hecs-168322 tmp]# echo $num

1

[root@hecs-168322 tmp]# export num

# 3.1. Run the script in the background

[root@hecs-168322 tmp]# ./test.sh &

[1] 25283

[root@hecs-168322 tmp]# 25283

1

num:99 # 3.2. The child process modifies the variable

# 4.1. The parent's variable is unchanged

[root@hecs-168322 tmp]# echo $num

1

# 4.2. The parent modifies the variable

[root@hecs-168322 tmp]# num=888

# 5. The child's variable is unchanged

[root@hecs-168322 tmp]# 99

[1]+ Done ./test.sh

test.sh => #!/bin/bash => runs in a bash child process

#!/bin/bash

echo $$

echo $num

num=99

echo num:$num

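# The child sleeps while the parent checks whether its own value changed

sleep 20

# The parent has changed its value; the child checks whether its own value changed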

echo $num
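The same isolation can be reproduced from Python as a cross-check. This is a sketch, not part of the original session: the helper `run_child` and the inline child program `CHILD_CODE` are made up for the example, and a Python child stands in for test.sh.

```python
import os
import subprocess
import sys

# Program run by the child: read the inherited value, overwrite it, read it again.
CHILD_CODE = (
    "import os\n"
    "print(os.environ.get('num'))\n"
    "os.environ['num'] = '99'\n"
    "print(os.environ['num'])\n"
)


def run_child(exported_value):
    """Start a child process with num exported and return the lines it printed."""
    env = dict(os.environ, num=exported_value)  # equivalent of `export num`
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout.split()


os.environ["num"] = "1"
child_output = run_child(os.environ["num"])
# The child saw the exported value and changed only its own copy.
print(child_output, os.environ["num"])
```

The child receives a copy of the environment when it starts, so its assignment to 99 never reaches the parent, matching steps 3.2 and 4.1 above.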

3. fork()

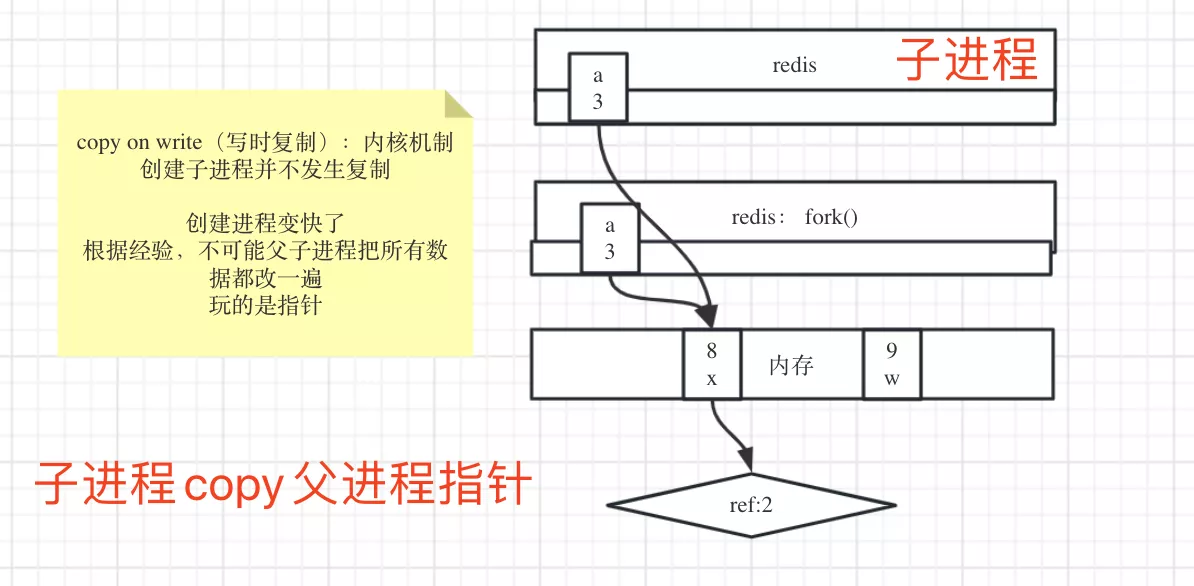

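fork() is a system call. The kernel guarantees that the pointers copied for the child all refer to the same point in time

- Copy-on-write is a kernel mechanism

- Fast: creating the child process does not copy the data

- Small memory footprint: data is copied only when it is modified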

man 2 fork

FORK(2) Linux Programmer's Manual

NAME

fork - create a child process

SYNOPSIS

#include <unistd.h>

pid_t fork(void);

// ...

NOTES

Under Linux, fork() is implemented using /*copy-on-write pages*/, so the only penalty that it incurs is the time and memory required to duplicate the parent's page tables, and to create a unique task structure for the child.
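The point-in-time behaviour of fork() can be observed from Python on Linux or another Unix. This is a minimal sketch: the function name `child_view_after_parent_write` is made up, two pipes are used only to order the steps, and it shows the visible semantics (the child keeps the value from the moment of the fork), not the page-level copying itself.

```python
import os


def child_view_after_parent_write():
    """Fork, change data in the parent, and report what each process sees."""
    data = {"k1": "v1"}
    ready_r, ready_w = os.pipe()  # parent -> child: "I have modified the data"
    out_r, out_w = os.pipe()      # child -> parent: the value the child sees

    pid = os.fork()
    if pid == 0:
        # Child: wait until the parent has written, then report our own view.
        os.close(ready_w)
        os.close(out_r)
        os.read(ready_r, 1)
        os.write(out_w, data["k1"].encode())
        os._exit(0)

    # Parent: modify the data after the fork, then let the child continue.
    os.close(ready_r)
    os.close(out_w)
    data["k1"] = "v2"
    os.write(ready_w, b"x")
    child_value = os.read(out_r, 16).decode()
    os.waitpid(pid, 0)
    os.close(ready_w)
    os.close(out_r)
    return data["k1"], child_value
```

The parent ends up with the new value while the child still reports the value from the moment of the fork, which is the property a background snapshot relies on.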

4. RDB

- Redis-DB

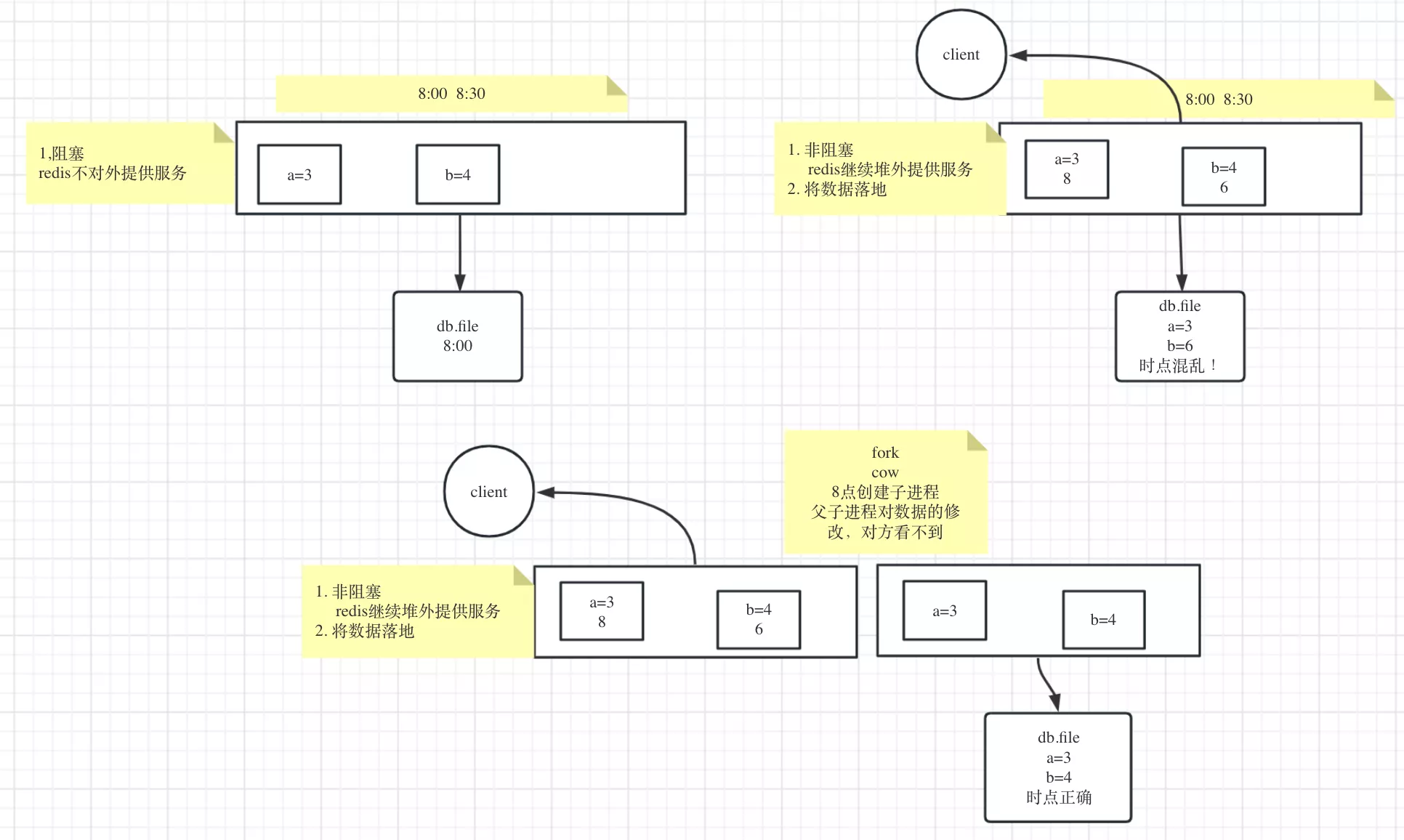

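- The fork() system call solves the "point-in-time confusion" problem: the snapshot must reflect the data at a single moment

- save: blocking, e.g. during shutdown maintenance

- bgsave: asynchronous and non-blocking; creates a child process with fork()

- RDB (bgsave) policy and configuration

- The configuration file uses the save directive

- Drawbacks

- No history chain: there is only one dump.rdb and each snapshot overwrites the previous one, so you cannot roll back to a chosen point in time

- Relatively more data is lost

- Writes made in the window between two snapshots are easily lost: an RDB is taken at 8:00 and the server goes down just before the 9:00 one

- Advantage: fast recovery. Similar to Java serialization (a binary format that does not need to be parsed the way JSON does)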

6379.conf

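# 1. Trigger strategy

# 1.1. The directive is named save, but it actually triggers bgsave

save 900 1 # 900 s and at least 1 change

save 300 10 # 300 s and at least 10 changes

save 60 10000 # 60 s and at least 10000 changes

# 1.2. Disable RDB with an empty string (in Redis 6.2, leaving save unset applies the built-in defaults instead)

save ""

# 2. Whether to compress

rdbcompression yes

# 3. Checksum

rdbchecksum yes

# 4. File name

dbfilename dump.rdb

# 5. Directory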

dir /var/lib/redis/6379
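How the save points combine can be sketched as a small predicate. This is an illustration under stated assumptions: `should_bgsave` and `SAVE_POINTS` are names invented here, and the strict comparison on elapsed time is an assumption about the exact boundary.

```python
# (seconds, changes) pairs taken from the save lines above.
SAVE_POINTS = [(900, 1), (300, 10), (60, 10000)]


def should_bgsave(save_points, seconds_since_last_save, changes_since_last_save):
    """Return True when any save point has both of its conditions met."""
    return any(
        seconds_since_last_save > seconds and changes_since_last_save >= changes
        for seconds, changes in save_points
    )
```

Each save point needs both its time and its change count to be reached, but any single save point is enough to trigger the background save.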

################################ SNAPSHOTTING ################################

# Save the DB to disk.

#

# save <seconds> <changes>

#

# Redis will save the DB if both the given number of seconds and the given

# number of write operations against the DB occurred.

#

# Snapshotting can be completely disabled with a single empty string argument

# as in following example:

#

# save ""

#

# Unless specified otherwise, by default Redis will save the DB:

# * After 3600 seconds (an hour) if at least 1 key changed

# * After 300 seconds (5 minutes) if at least 100 keys changed

# * After 60 seconds if at least 10000 keys changed

#

# You can set these explicitly by uncommenting the three following lines.

#

# save 3600 1

# save 300 100

# save 60 10000

# By default Redis will stop accepting writes if RDB snapshots are enabled

# (at least one save point) and the latest background save failed.

# This will make the user aware (in a hard way) that data is not persisting

# on disk properly, otherwise chances are that no one will notice and some

# disaster will happen.

#

# If the background saving process will start working again Redis will

# automatically allow writes again.

#

# However if you have setup your proper monitoring of the Redis server

# and persistence, you may want to disable this feature so that Redis will

# continue to work as usual even if there are problems with disk,

# permissions, and so forth.

stop-writes-on-bgsave-error yes

# Compress string objects using LZF when dump .rdb databases?

# By default compression is enabled as it's almost always a win.

# If you want to save some CPU in the saving child set it to 'no' but

# the dataset will likely be bigger if you have compressible values or keys.

rdbcompression yes

# Since version 5 of RDB a CRC64 checksum is placed at the end of the file.

# This makes the format more resistant to corruption but there is a performance

# hit to pay (around 10%) when saving and loading RDB files, so you can disable it

# for maximum performances.

#

# RDB files created with checksum disabled have a checksum of zero that will

# tell the loading code to skip the check.

rdbchecksum yes

# Enables or disables full sanitation checks for ziplist and listpack etc when

# loading an RDB or RESTORE payload. This reduces the chances of a assertion or

# crash later on while processing commands.

# Options:

# no - Never perform full sanitation

# yes - Always perform full sanitation

# clients - Perform full sanitation only for user connections.

# Excludes: RDB files, RESTORE commands received from the master

# connection, and client connections which have the

# skip-sanitize-payload ACL flag.

# The default should be 'clients' but since it currently affects cluster

# resharding via MIGRATE, it is temporarily set to 'no' by default.

#

# sanitize-dump-payload no

# The filename where to dump the DB

dbfilename dump.rdb

# Remove RDB files used by replication in instances without persistence

# enabled. By default this option is disabled, however there are environments

# where for regulations or other security concerns, RDB files persisted on

# disk by masters in order to feed replicas, or stored on disk by replicas

# in order to load them for the initial synchronization, should be deleted

# ASAP. Note that this option ONLY WORKS in instances that have both AOF

# and RDB persistence disabled, otherwise is completely ignored.

#

# An alternative (and sometimes better) way to obtain the same effect is

# to use diskless replication on both master and replicas instances. However

# in the case of replicas, diskless is not always an option.

rdb-del-sync-files no

# The working directory.

#

# The DB will be written inside this directory, with the filename specified

# above using the 'dbfilename' configuration directive.

#

# The Append Only File will also be created inside this directory.

#

# Note that you must specify a directory here, not a file name.

dir ./

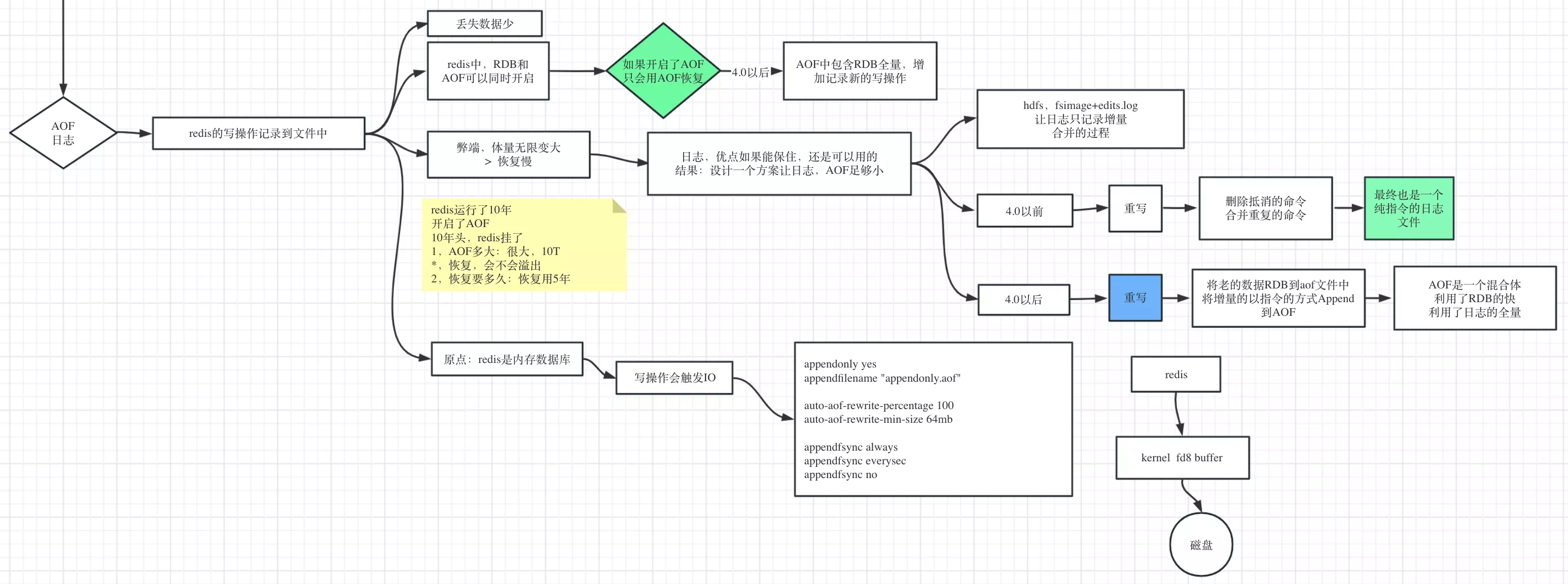

5. AOF

append-only-file

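- Loses less data

- In Redis, RDB and AOF can be enabled at the same time

- If AOF is enabled, only the AOF is used for recovery

- Since 4.0, the AOF contains a full RDB snapshot, with new write operations appended after it

- Drawback: the file grows without bound, and recovery is slow

- If Redis has run for 10 years and the AOF is 10 TB, will recovery overflow memory? Will it take 5 years?

- Before 4.0: a rewrite removes commands that cancel each other out and merges repeated commands. The result is still a log file made purely of commands

- Since 4.0: a rewrite stores the existing data in the AOF file in RDB format and appends incremental changes to the AOF as commands. The AOF becomes a hybrid that combines the speed of RDB with the completeness of the log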
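The effect of the pre-4.0 rewrite (cancelling and merging commands) can be sketched by replaying a log and emitting one command per surviving key. This is a simplified model with a hypothetical `rewrite_log` function that handles only set and del; as far as I know, the real rewrite is generated from the in-memory dataset rather than by reading the old log.

```python
def rewrite_log(commands):
    """Compact a list of (op, key, value) commands into a minimal SET log."""
    state = {}
    for op, key, value in commands:
        if op == "set":
            state[key] = value      # a later SET replaces an earlier one
        elif op == "del":
            state.pop(key, None)    # SET followed by DEL cancels out
    return [("set", key, value) for key, value in state.items()]


log = [
    ("set", "k1", "v1"),
    ("set", "k1", "v2"),
    ("set", "k2", "x"),
    ("del", "k2", None),
]
print(rewrite_log(log))
```

Four commands shrink to a single SET for k1, which is why a rewrite can bring a long-running log back down to roughly the size of the live data.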

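# 1. Enable AOF (disabled by default)

appendonly yes

# 2. Rewrite trigger strategy

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

# 3. File name; stored in the same directory as the .rdb file

appendfilename "appendonly.aof"

# 4. Three levels: the policy for flushing the kernel buffer to disk (choose one)

appendfsync always

appendfsync everysec

appendfsync no

# 5. Whether the main process skips fsync on the AOF while a child process is running BGSAVE or BGREWRITEAOF

no-appendfsync-on-rewrite no

# 6. Load a truncated AOF at startup instead of refusing to start

aof-load-truncated yes

# 7. Hybrid AOF

# From the config comments => AOF file starts with the "REDIS" string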

aof-use-rdb-preamble yes

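- Every disk I/O operation goes through the kernel, which allocates a buffer for the process's file descriptor

- appendfsync controls when that buffer is flushed. With no, flushing is left entirely to the kernel, so up to one buffer of data may be lost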
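The three appendfsync levels can be sketched with `os.write` and `os.fsync`. This is a single-threaded illustration under stated assumptions: the `AofWriter` class and its `fsync_calls` counter are invented for the example, and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
import os
import tempfile


class AofWriter:
    """Append-only writer that applies one of the three fsync policies."""

    def __init__(self, path, policy="everysec"):
        self.fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
        self.policy = policy
        self.last_fsync = 0.0
        self.fsync_calls = 0  # counted only so the example can be inspected

    def append(self, data, now):
        os.write(self.fd, data)  # the data is now in the kernel buffer
        due = self.policy == "everysec" and now - self.last_fsync >= 1.0
        if self.policy == "always" or due:
            os.fsync(self.fd)    # force the kernel buffer onto the disk
            self.last_fsync = now
            self.fsync_calls += 1
        # policy "no": never fsync here; the kernel flushes when it wants

    def close(self):
        os.close(self.fd)


path = os.path.join(tempfile.mkdtemp(), "appendonly.aof")
writer = AofWriter(path, policy="everysec")
for timestamp in (10.0, 10.2, 10.9, 11.1):
    writer.append(b"x", now=timestamp)
writer.close()
print(writer.fsync_calls)
```

With everysec, four writes within about a second cost two fsync calls; always would cost four and no would cost none, which is the speed versus safety trade-off described above.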

############################## APPEND ONLY MODE ###############################

# By default Redis asynchronously dumps the dataset on disk. This mode is

# good enough in many applications, but an issue with the Redis process or

# a power outage may result into a few minutes of writes lost (depending on

# the configured save points).

#

# The Append Only File is an alternative persistence mode that provides

# much better durability. For instance using the default data fsync policy

# (see later in the config file) Redis can lose just one second of writes in a

# dramatic event like a server power outage, or a single write if something

# wrong with the Redis process itself happens, but the operating system is

# still running correctly.

#

# AOF and RDB persistence can be enabled at the same time without problems.

# If the AOF is enabled on startup Redis will load the AOF, that is the file

# with the better durability guarantees.

#

# Please check https://redis.io/topics/persistence for more information.

appendonly no

# The name of the append only file (default: "appendonly.aof")

appendfilename "appendonly.aof"

# The fsync() call tells the Operating System to actually write data on disk

# instead of waiting for more data in the output buffer. Some OS will really flush

# data on disk, some other OS will just try to do it ASAP.

#

# Redis supports three different modes:

#

# no: don't fsync, just let the OS flush the data when it wants. Faster.

# always: fsync after every write to the append only log. Slow, Safest.

# everysec: fsync only one time every second. Compromise.

#

# The default is "everysec", as that's usually the right compromise between

# speed and data safety. It's up to you to understand if you can relax this to

# "no" that will let the operating system flush the output buffer when

# it wants, for better performances (but if you can live with the idea of

# some data loss consider the default persistence mode that's snapshotting),

# or on the contrary, use "always" that's very slow but a bit safer than

# everysec.

#

# More details please check the following article:

# http://antirez.com/post/redis-persistence-demystified.html

#

# If unsure, use "everysec".

# appendfsync always

appendfsync everysec

# appendfsync no

# When the AOF fsync policy is set to always or everysec, and a background

# saving process (a background save or AOF log background rewriting) is

# performing a lot of I/O against the disk, in some Linux configurations

# Redis may block too long on the fsync() call. Note that there is no fix for

# this currently, as even performing fsync in a different thread will block

# our synchronous write(2) call.

#

# In order to mitigate this problem it's possible to use the following option

# that will prevent fsync() from being called in the main process while a

# BGSAVE or BGREWRITEAOF is in progress.

#

# This means that while another child is saving, the durability of Redis is

# the same as "appendfsync none". In practical terms, this means that it is

# possible to lose up to 30 seconds of log in the worst scenario (with the

# default Linux settings).

#

# If you have latency problems turn this to "yes". Otherwise leave it as

# "no" that is the safest pick from the point of view of durability.

no-appendfsync-on-rewrite no

# Automatic rewrite of the append only file.

# Redis is able to automatically rewrite the log file implicitly calling

# BGREWRITEAOF when the AOF log size grows by the specified percentage.

#

# This is how it works: Redis remembers the size of the AOF file after the

# latest rewrite (if no rewrite has happened since the restart, the size of

# the AOF at startup is used).

#

# This base size is compared to the current size. If the current size is

# bigger than the specified percentage, the rewrite is triggered. Also

# you need to specify a minimal size for the AOF file to be rewritten, this

# is useful to avoid rewriting the AOF file even if the percentage increase

# is reached but it is still pretty small.

#

# Specify a percentage of zero in order to disable the automatic AOF

# rewrite feature.

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb
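The two settings above combine as sketched below. The function name `should_rewrite_aof` is hypothetical, and the integer growth formula is my reading of the description in the comments rather than a quotation of the server code.

```python
MB = 1024 * 1024


def should_rewrite_aof(current_size, base_size, percentage=100, min_size=64 * MB):
    """Decide whether an automatic AOF rewrite should start.

    current_size: current AOF size in bytes
    base_size:    AOF size after the latest rewrite (or at startup)
    """
    if percentage == 0:
        return False                 # a percentage of zero disables the feature
    if current_size <= min_size:
        return False                 # still too small to be worth rewriting
    base = base_size if base_size > 0 else 1
    growth = current_size * 100 // base - 100   # growth over the base, in percent
    return growth >= percentage
```

With the values shown (100 and 64mb), a rewrite starts once the file is larger than 64 MB and has at least doubled since the last rewrite.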

# An AOF file may be found to be truncated at the end during the Redis

# startup process, when the AOF data gets loaded back into memory.

# This may happen when the system where Redis is running

# crashes, especially when an ext4 filesystem is mounted without the

# data=ordered option (however this can't happen when Redis itself

# crashes or aborts but the operating system still works correctly).

#

# Redis can either exit with an error when this happens, or load as much

# data as possible (the default now) and start if the AOF file is found

# to be truncated at the end. The following option controls this behavior.

#

# If aof-load-truncated is set to yes, a truncated AOF file is loaded and

# the Redis server starts emitting a log to inform the user of the event.

# Otherwise if the option is set to no, the server aborts with an error

# and refuses to start. When the option is set to no, the user requires

# to fix the AOF file using the "redis-check-aof" utility before to restart

# the server.

#

# Note that if the AOF file will be found to be corrupted in the middle

# the server will still exit with an error. This option only applies when

# Redis will try to read more data from the AOF file but not enough bytes

# will be found.

aof-load-truncated yes

# When rewriting the AOF file, Redis is able to use an RDB preamble in the

# AOF file for faster rewrites and recoveries. When this option is turned

# on the rewritten AOF file is composed of two different stanzas:

#

# [RDB file][AOF tail]

#

# When loading, Redis recognizes that the AOF file starts with the "REDIS"

# string and loads the prefixed RDB file, then continues loading the AOF

# tail.

aof-use-rdb-preamble yes

3. practice

1. config

/etc/redis/6379.conf

# 1. Run in the foreground to watch the log directly

daemonize no

# Disable the log file

# logfile /var/log/redis_6379.log

# 2. Enable AOF

appendonly yes

# Clear out the existing persistence files

/var/lib/redis/6379/dump.rdb

/var/lib/redis/6379/appendonly.aof

2. alone_model

# Disable hybrid AOF mode

aof-use-rdb-preamble no

1. appendonly.aof

# View the AOF

vim appendonly.aof

127.0.0.1:6379> set k1 v1

OK

127.0.0.1:6379> set k1 v2

OK

# Rewrite (compact) the AOF

127.0.0.1:6379> BGREWRITEAOF

Background append only file rewriting started

*: how many elements the command consists of

$: how many bytes the element consists of

appendonly.aof

➜ 6379# cat appendonly.aof

*2

$6

SELECT

$1

0

*3

$3

set

$2

k1

$2

v1

*3

$3

set

$2

k1

$2

v2

# After bgrewriteaof: redundant commands are removed and the AOF is smaller

➜ 6379# cat appendonly.aof

*2

$6

SELECT

$1

0

*3

$3

SET

$2

k1

$2

v2
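The `*` and `$` framing seen in the two dumps above can be reproduced with a few lines of Python. The helper name `encode_command` is made up for this sketch; the output format follows what the files above show, with each line terminated by CRLF (visible as ^M in the hybrid dump further down).

```python
def encode_command(*args):
    """Encode a command the way it appears in appendonly.aof."""
    parts = [f"*{len(args)}\r\n".encode()]           # *N: number of elements
    for arg in args:
        raw = arg.encode() if isinstance(arg, str) else bytes(arg)
        parts.append(b"$%d\r\n%s\r\n" % (len(raw), raw))  # $N: bytes in element
    return b"".join(parts)


print(encode_command("set", "k1", "v1"))
```

Because every element carries its byte length, values may contain spaces or newlines without any escaping.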

2. dump.rdb

# Synchronous (blocking) save

save

# Asynchronous RDB save in the background

127.0.0.1:6379> bgsave

Background saving started

# Verify dump.rdb

redis-check-rdb dump.rdb

- Server PID 1620 => background save child PID 1686

1620:C 05 Apr 2023 10:42:10.929 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1620:C 05 Apr 2023 10:42:10.929 # Redis version=6.2.6, bits=64, commit=00000000, modified=0, pid=1620, just started

1620:C 05 Apr 2023 10:42:10.929 # Configuration loaded

1620:M 05 Apr 2023 10:42:10.930 * Increased maximum number of open files to 10032 (it was originally set to 8192).

1620:M 05 Apr 2023 10:42:10.930 * monotonic clock: POSIX clock_gettime

_._

_.-``__ ''-._

_.-`` `. `_. ''-._ Redis 6.2.6 (00000000/0) 64 bit

.-`` .-```. ```\/ _.,_ ''-._

( ' , .-` | `, ) Running in standalone mode

|`-._`-...-` __...-.``-._|'` _.-'| Port: 6379

| `-._ `._ / _.-' | PID: 1620

`-._ `-._ `-./ _.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' | https://redis.io

`-._ `-._`-.__.-'_.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' |

`-._ `-._`-.__.-'_.-' _.-'

`-._ `-.__.-' _.-'

`-._ _.-'

`-.__.-'

1620:M 05 Apr 2023 10:42:10.931 # Server initialized

1620:M 05 Apr 2023 10:42:10.931 * Ready to accept connections

1620:M 05 Apr 2023 10:57:11.009 * 1 changes in 900 seconds. Saving...

1620:M 05 Apr 2023 10:57:11.009 * Background saving started by pid 1686

1686:C 05 Apr 2023 10:57:11.011 * DB saved on disk

1620:M 05 Apr 2023 10:57:11.110 * Background saving terminated with success

# View the RDB file

vim dump.rdb

# Check the RDB file

➜ 6379# redis-check-rdb dump.rdb

[offset 0] Checking RDB file dump.rdb

[offset 26] AUX FIELD redis-ver = '6.2.6'

[offset 40] AUX FIELD redis-bits = '64'

[offset 52] AUX FIELD ctime = '1680666771'

[offset 67] AUX FIELD used-mem = '1111952'

[offset 83] AUX FIELD aof-preamble = '0'

[offset 85] Selecting DB ID 0

[offset 104] Checksum OK

[offset 104] \o/ RDB looks OK! \o/

[info] 1 keys read

[info] 0 expires

[info] 0 already expired

dump.rdb

REDIS0009ú redis-ver^E6.2.6ú

redis-bitsÀ@ú^EctimeÂað,dú^Hused-mem°=^Q^@ú^Laof-preambleÀ^@þ^@û^A^@^@^Bk1^Bv1ÿBvxz<82><86>ÔK

3. mix_model

# Enable hybrid AOF mode

aof-use-rdb-preamble yes

127.0.0.1:6379> set k1 v1

OK

127.0.0.1:6379> set k1 v2

OK

127.0.0.1:6379> set k1 v3

OK

127.0.0.1:6379> BGREWRITEAOF

Background append only file rewriting started

127.0.0.1:6379> set k2 v2

OK

127.0.0.1:6379> BGSAVE

Background saving started

# The .aof file = full RDB snapshot + incremental AOF commands

REDIS0009ú redis-ver^E6.2.6ú

redis-bitsÀ@ú^EctimeÂ<85>÷,dú^Hused-memÂ<90>÷^P^@ú^Laof-preambleÀ^Aþ^@û^A^@^@^Bk1^Bv3ÿM^HDíý8Ë<90>*2^M

$6^M

SELECT^M

$1^M

0^M

*3^M

$3^M

set^M

$2^M

k2^M

$2^M

v2^M

1962:C 05 Apr 2023 12:20:21.295 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1962:C 05 Apr 2023 12:20:21.295 # Redis version=6.2.6, bits=64, commit=00000000, modified=0, pid=1962, just started

1962:C 05 Apr 2023 12:20:21.295 # Configuration loaded

1962:M 05 Apr 2023 12:20:21.296 * Increased maximum number of open files to 10032 (it was originally set to 8192).

1962:M 05 Apr 2023 12:20:21.296 * monotonic clock: POSIX clock_gettime

_._

_.-``__ ''-._

_.-`` `. `_. ''-._ Redis 6.2.6 (00000000/0) 64 bit

.-`` .-```. ```\/ _.,_ ''-._

( ' , .-` | `, ) Running in standalone mode

|`-._`-...-` __...-.``-._|'` _.-'| Port: 6379

| `-._ `._ / _.-' | PID: 1962

`-._ `-._ `-./ _.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' | https://redis.io

`-._ `-._`-.__.-'_.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' |

`-._ `-._`-.__.-'_.-' _.-'

`-._ `-.__.-' _.-'

`-._ _.-'

`-.__.-'

1962:M 05 Apr 2023 12:20:21.297 # Server initialized

1962:M 05 Apr 2023 12:20:21.297 * DB loaded from append only file: 0.000 seconds

1962:M 05 Apr 2023 12:20:21.297 * Ready to accept connections

1962:M 05 Apr 2023 12:22:29.083 * Background append only file rewriting started by pid 2028 # 1. AOF rewrite started

1962:M 05 Apr 2023 12:22:29.107 * AOF rewrite child asks to stop sending diffs.

2028:C 05 Apr 2023 12:22:29.107 * Parent agreed to stop sending diffs. Finalizing AOF...

2028:C 05 Apr 2023 12:22:29.107 * Concatenating 0.00 MB of AOF diff received from parent.

2028:C 05 Apr 2023 12:22:29.107 * SYNC append only file rewrite performed

1962:M 05 Apr 2023 12:22:29.198 * Background AOF rewrite terminated with success

1962:M 05 Apr 2023 12:22:29.198 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

1962:M 05 Apr 2023 12:22:29.198 * Background AOF rewrite finished successfully

1962:M 05 Apr 2023 12:24:07.758 * Background saving started by pid 2046 # 2. RDB save started

2046:C 05 Apr 2023 12:24:07.759 * DB saved on disk

1962:M 05 Apr 2023 12:24:07.824 * Background saving terminated with success